tl;dr: Don't just `apt install rustc cargo`. Either do that and make sure to use only Rust libraries from your distro (with the tiresome config runes below); or, just use `rustup`.

Don't do the obvious thing; it's never what you want

Debian ships a Rust compiler, and a large number of Rust libraries.

But if you just do things the obvious default way, with `apt install rustc cargo`, you will end up using Debian's compiler but upstream libraries, directly and uncurated from crates.io.

This is not what you want. There are about two reasonable things to do, depending on your preferences.

Q. Download and run whatever code from the internet?

The key question is this:

Are you comfortable downloading code, directly from hundreds of upstream Rust package maintainers, and running it?

That's what `cargo` does. It's one of the main things it's for. Debian's `cargo` behaves, in this respect, just like upstream's. Let me say that again:

Debian's cargo promiscuously downloads code from crates.io just like upstream cargo.

So if you use Debian's cargo in the most obvious way, you are still downloading and running all those random libraries. The only thing you're avoiding downloading is the Rust compiler itself, which is precisely the part that is most carefully maintained, and of least concern.

Debian's cargo can even download from crates.io when you're building official Debian source packages written in Rust: if you run `dpkg-buildpackage`, the downloading is suppressed; but a plain `cargo build` will try to obtain and use dependencies from the upstream ecosystem. ("Happily", if you do this, it's quite likely to bail out early due to version mismatches, before actually downloading anything.)

Option 1: WTF, no I don't want curl|bash

OK, but then you must limit yourself to libraries available within Debian. Each Debian release provides a curated set. It may or may not be sufficient for your needs. Many capable programs can be written using the packages in Debian.

But any upstream Rust project that you encounter is likely to be a pain to get working, unless their maintainers specifically intend to support this. (This is fairly rare, and the Rust tooling doesn't make it easy.)

To go with this plan, `apt install rustc cargo` and put this in your configuration, in `$HOME/.cargo/config.toml`:

```toml
[source.debian-packages]
directory = "/usr/share/cargo/registry"

[source.crates-io]
replace-with = "debian-packages"
```

This causes cargo to look in `/usr/share` for dependencies, rather than downloading them from crates.io. You must then install the `librust-FOO-dev` packages for each of your dependencies, with `apt`.

This will allow you to write your own program in Rust, and build it using `cargo build`.
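Put together, Option 1 looks roughly like the sequence below. It is a sketch, not an official recipe. `FOO` is a placeholder for each crate your project depends on. The sketch also assumes Debian's usual naming, in which crate `foo_bar` is packaged as `librust-foo-bar-dev`: underscores aren't allowed in Debian package names, so they become hyphens.

```shell
# Debian's compiler and cargo
sudo apt install rustc cargo

# With the source replacement in ~/.cargo/config.toml in place,
# look up which Debian package provides a given crate (FOO is a placeholder)
apt-cache search --names-only '^librust-FOO'

# Install one librust-*-dev package per dependency
sudo apt install librust-FOO-dev

# Dependencies now resolve from /usr/share/cargo/registry instead of crates.io
cargo build
```

If `cargo build` then reports that no matching package or version can be found, that most likely means the crate (or the required version of it) isn't in this Debian release's curated set.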

Option 2: Biting the curl|bash bullet

If you want to build software that isn't specifically targeted at Debian's Rust, you will probably need to use packages from crates.io, not from Debian.

If you're going to do that, there is little point in not using `rustup` to get the latest compiler. `rustup`'s install rune is alarming, but cargo will be doing exactly the same kind of thing, only worse (because it trusts many more people) and more hidden.

So in this case: do run the `curl|bash` install rune.

Hopefully the Rust project you are trying to build has shipped a `Cargo.lock`; that contains hashes of all the dependencies that they last used and tested. If you run `cargo build --locked`, cargo will only use those versions, which are hopefully OK.

And you can run `cargo audit` to see if there are any reported vulnerabilities or problems. But you'll have to bootstrap this with `cargo install --locked cargo-audit`; cargo-audit is from the RUSTSEC folks who do care about these kinds of things, so hopefully running their code (and their dependencies) is fine. Note the `--locked`, which is needed because `cargo`'s default behaviour is wrong: without it, `cargo install` ignores the `Cargo.lock` shipped with the package and resolves fresh dependency versions.
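As a recap, Option 2 boils down to roughly these commands. This is a sketch that assumes the project ships a `Cargo.lock`. The rustup rune shown is the one published on rustup.rs at the time of writing, so copy it from there rather than from here.

```shell
# Install rustup and the latest stable toolchain (rune from https://rustup.rs)
curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

# In the project directory: build with exactly the versions pinned in Cargo.lock
cargo build --locked

# Bootstrap cargo-audit using its own Cargo.lock, then check the project's
# dependencies against the RustSec advisory database
cargo install --locked cargo-audit
cargo audit
```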

Privilege separation

This approach is rather alarming. For my personal use, I wrote a privsep tool which allows me to run all this upstream Rust code as a separate user.

That tool is nailing-cargo. It's not particularly well productised, or tested, but it does work for at least one person besides me. You may wish to try it out, or consider alternative arrangements.

Bug reports and patches welcome.

OMG what a mess

Indeed. There are a large number of technical and social factors at play.

cargo itself is deeply troubling, both in principle and in detail. I often find myself severely disappointed with its maintainers' decisions. In mitigation, much of the wider Rust upstream community does take this kind of thing very seriously, and often makes good choices. RUSTSEC is one of the results.

Debian's technical arrangements for Rust packaging are quite dysfunctional, too: IMO the scheme is based on fundamentally wrong design principles. But the Debian Rust packaging team is dynamic, constantly working the update treadmills; and the team is generally welcoming and helpful.

Sadly, the last time I explored the possibility, the Debian Rust Team didn't have the appetite for more fundamental changes to the workflow (including, for example, changes to dependency version handling). Significant improvements to upstream cargo's approach seem unlikely, too; we can only hope that eventually someone might manage to supplant it.

edited 2024-03-21 21:49 to add a cut tag


This blog post shares my thoughts on attending Kubecon and CloudNativeCon 2024 Europe in Paris. It was my third time at this conference, and it felt bigger than last year's in Amsterdam. Apparently it had an impact on public transport: I missed part of the opening keynote because of the extremely busy rush-hour tram in Paris.

On Artificial Intelligence, Machine Learning and GPUs

Talks about AI, ML, and GPUs were everywhere this year. While it wasn't my main interest, I did learn about GPU resource sharing and power usage on Kubernetes. There were also ideas about offering Models-as-a-Service, which could be cool for Wikimedia Toolforge in the future.

See also:

On security, policy and authentication

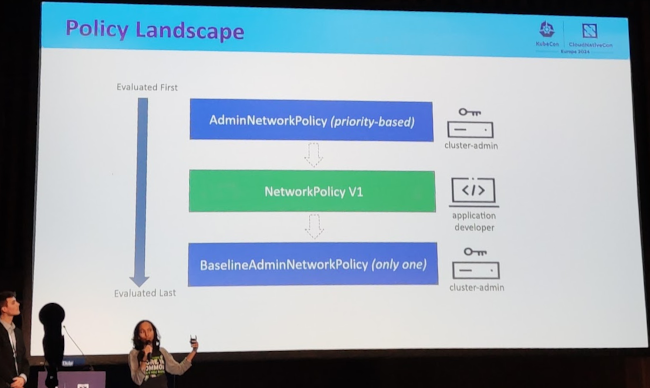

This was probably my main interest at the event, given that Wikimedia Toolforge was about to migrate away from Pod Security Policy, and we were evaluating different alternatives.

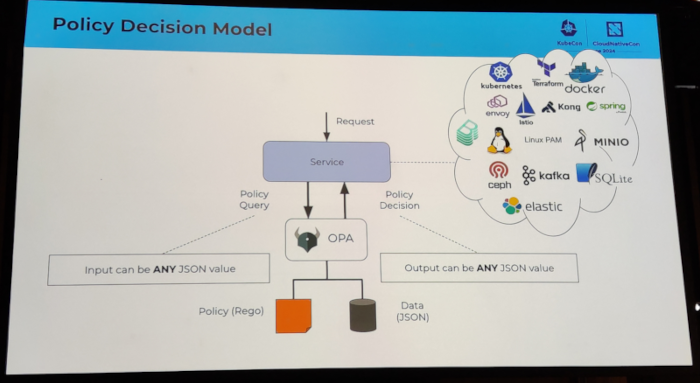

In contrast to my previous visits to Kubecon, where three policy agents had a presence in the program schedule, namely Kyverno, Kubewarden and OpenPolicyAgent (OPA), this time only OPA had the most relevant sessions.

One surprising bit I got from one of the OPA sessions was that it could be used to authorize Linux PAM sessions. Could this be useful for Wikimedia Toolforge?

I attended several sessions related to authentication topics. I discovered the Keycloak software, which looks very promising. I also attended an OAuth2 session which I had a hard time following, because I clearly lacked some background knowledge about how OAuth2 works internally.

I also attended a couple of sessions that ended up being vendor sales talks.

See also:

On container image builds, Harbor registry, etc.

This topic was also of interest to me because, again, it is a core part of Wikimedia Toolforge.

I attended a couple of sessions regarding container image builds, including topics like general best practices, image minimization, and buildpacks. I learned about kpack, which at first sight felt like a nice simplification of how the Toolforge build service was implemented.

I also attended a session by the Harbor project maintainers where they shared some valuable information on things happening soon or in the future, for example:

I very recently found myself missing semantics for limiting the number of open connections per namespace; see Phabricator T356164: "[toolforge] several tools get periods of connection refused (104) when connecting to wikis". This functionality should be available in the lower-level tools, I mean Netfilter. I may submit a proposal upstream at some point, so that they consider adding this to the Kubernetes API.

Final notes

In general, I believe I learned many things, and perhaps even more importantly I re-learned some stuff I had forgotten because of lack of daily exposure. I'm really happy that the cloud native way of thinking was reinforced in me, which I still need because most of my muscle memory for approaching systems architecture and engineering is from the old pre-cloud days. That being said, I felt less engaged with the content of the conference schedule compared to last year. I don't know whether the schedule itself was less interesting, or whether I'm losing interest.

Finally, though not an official conference track, we met a bunch of folks from Wikimedia Deutschland. We had a really nice time talking about how wikibase.cloud uses Kubernetes, whether they could run in Wikimedia Cloud Services, and why structured data is so nice.

Turns out that VPS provider Vultr's

I recently became a maintainer of/committer to

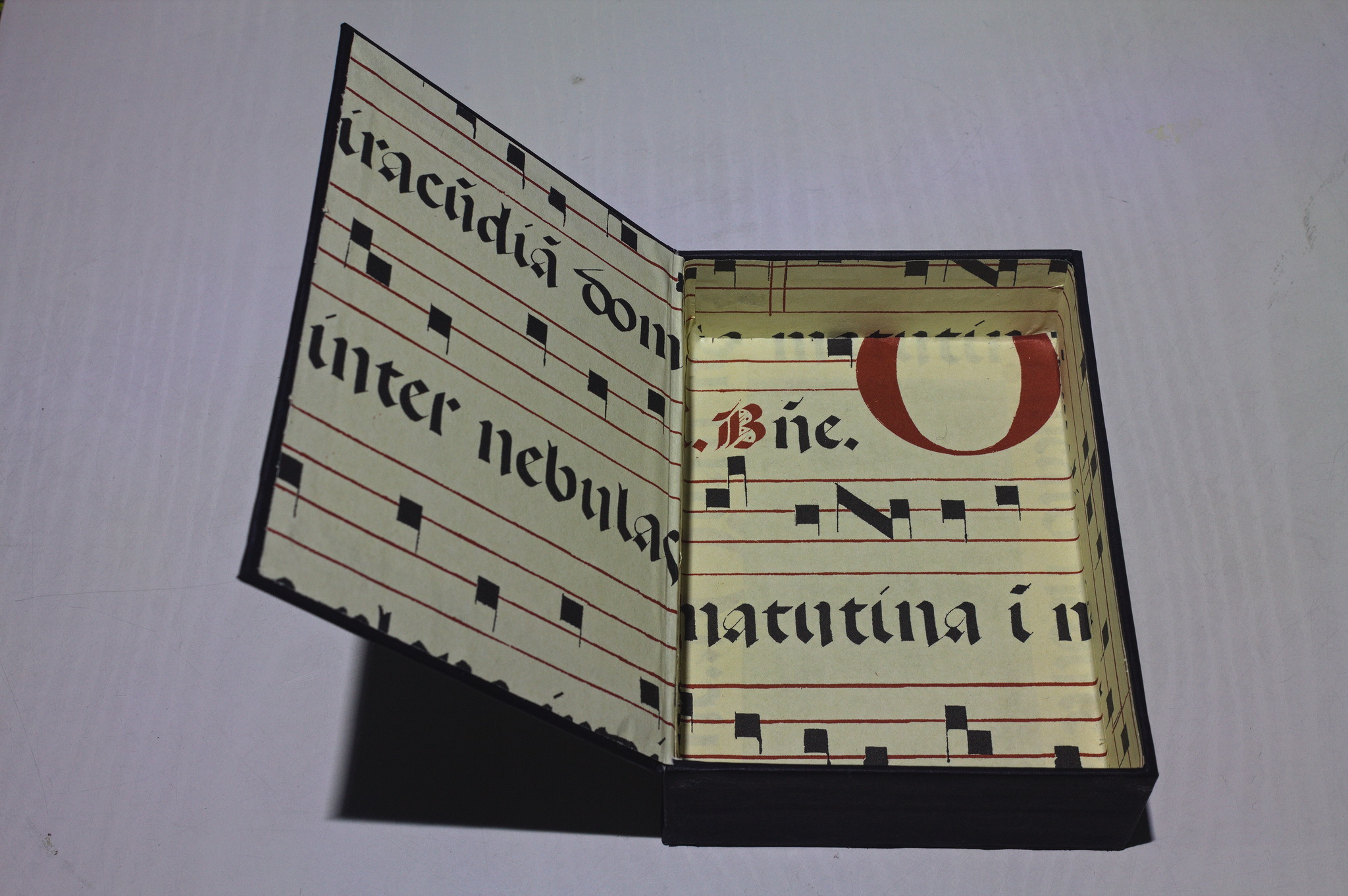

Thanks to All Saints Day, I've just had a five-day weekend. One of those days I woke up and decided I absolutely needed a cartonnage box for the cardboard and linocut

One of the boxes was temporarily used for the plastic piecepack I got with the

A few days ago

One of the most common fallacies programmers fall into is that we will jump to automating a solution before we stop and figure out how much time it would even save.

In taking a slow improvement route to solve this problem for myself, I've managed not to invest too much time

A welcome sign at Bangkok's Suvarnabhumi airport.

Bus from Suvarnabhumi Airport to Jomtien Beach in Pattaya.

Road near Jomtien Beach in Pattaya.

Photo of a songthaew in Pattaya. There are shared songthaews which run along Jomtien Second Road and charge 10 baht to go anywhere on the route.

Jomtien Beach in Pattaya.

A welcome sign at Pattaya Floating Market.

This Korean Vegetasty noodles pack was yummy and was available at many 7-Eleven stores.

Wat Arun temple stamps your hand upon entry.

Wat Arun temple

Khao San Road

A food stall at Khao San Road

Chao Phraya Express Boat

Banana with yellow flesh

Fruits at a stall in Bangkok

Trimmed pineapples from Thailand.

Corn in Bangkok.

A board showing the coffee menu, with prices, at a 7-Eleven store in Pattaya.

In this section of 7-Eleven, you can buy premix coffee and prepare it with the hot water provided at the store.

Red wine being served on Air India.

A first revision of the still only one-week old (at

Last week we held our promised miniDebConf in Santa Fe City, Santa Fe province, Argentina, just across the river from Paraná, where I spent almost six beautiful months I will never forget.

I like using one machine and setup for everything, from serious development work to hobby projects to managing my finances. This is very convenient, as often the lines between these are blurred. But it is also scary if I think of the large number of people who I have to trust to not want to extract all my personal data. Whenever I run a
